Cloud Functions Logging

Overview

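This guide walks through setting up a Google Cloud Function and forwarding its logs to SigNoz. Cloud Logging routes the function's logs to a Pub/Sub topic, and an OpenTelemetry (OTel) Collector running on a Compute Engine instance forwards them from that topic to SigNoz Cloud. By the end of this guide, you will have a setup that automatically sends your Cloud Function logs to SigNoz.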

Here's a quick summary of what we will be doing in this guide:

- Create and configure a Cloud Function

- Create a Pub/Sub topic

- Set up a Log Router to route the Cloud Function logs to the Pub/Sub topic

- Create a Compute Engine instance

- Set up an OTel Collector to forward logs from the Pub/Sub topic to SigNoz Cloud

- Invoke the Cloud Function using its trigger URL

- Send and visualize the logs in SigNoz Cloud

Prerequisites

- Google Cloud account with administrative privileges or the Cloud Functions Admin role.

- SigNoz Cloud account. This demonstration uses SigNoz Cloud, and you will need its ingestion details. To get your Ingestion Key and Ingestion URL, sign in to your SigNoz Cloud account and go to Settings >> Ingestion Settings.

- Access to a project in GCP

- Google Cloud Functions APIs enabled (follow this guide to see how to enable an API in Google Cloud)

Get started with Cloud Function Configuration

Follow these steps to create the Cloud Function:

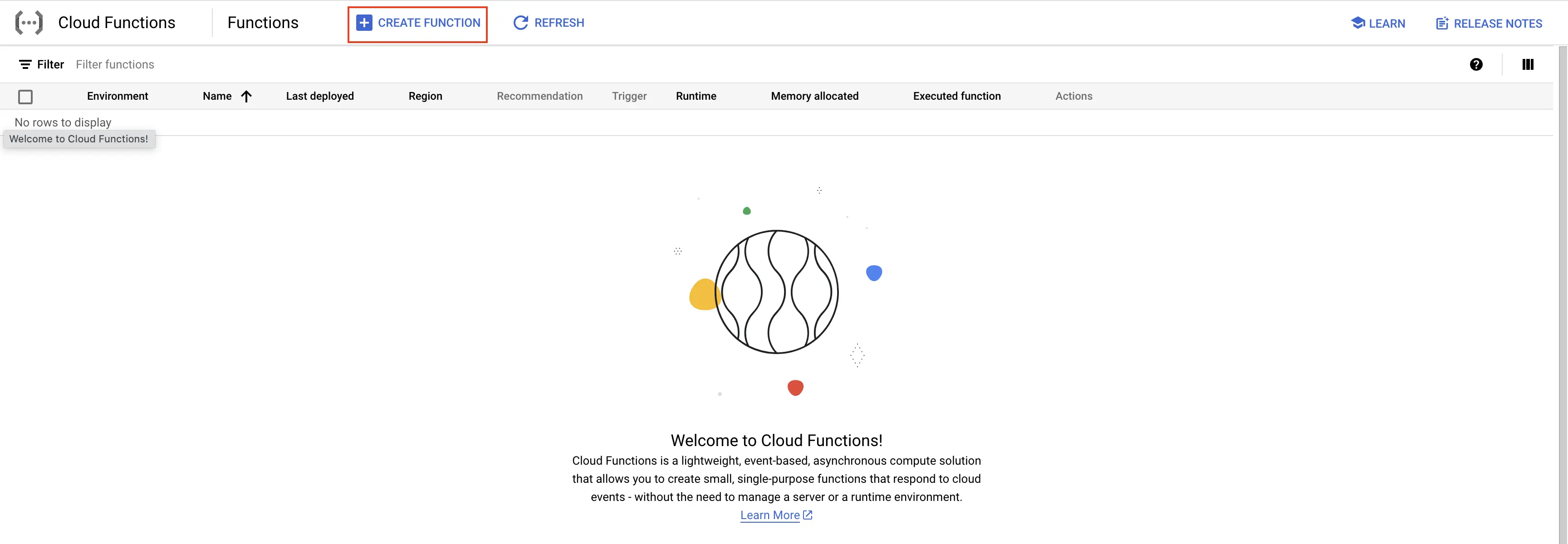

Step 1: In your GCP console, search for Cloud Functions, open Functions, and click CREATE FUNCTION.

GCP Cloud Functions

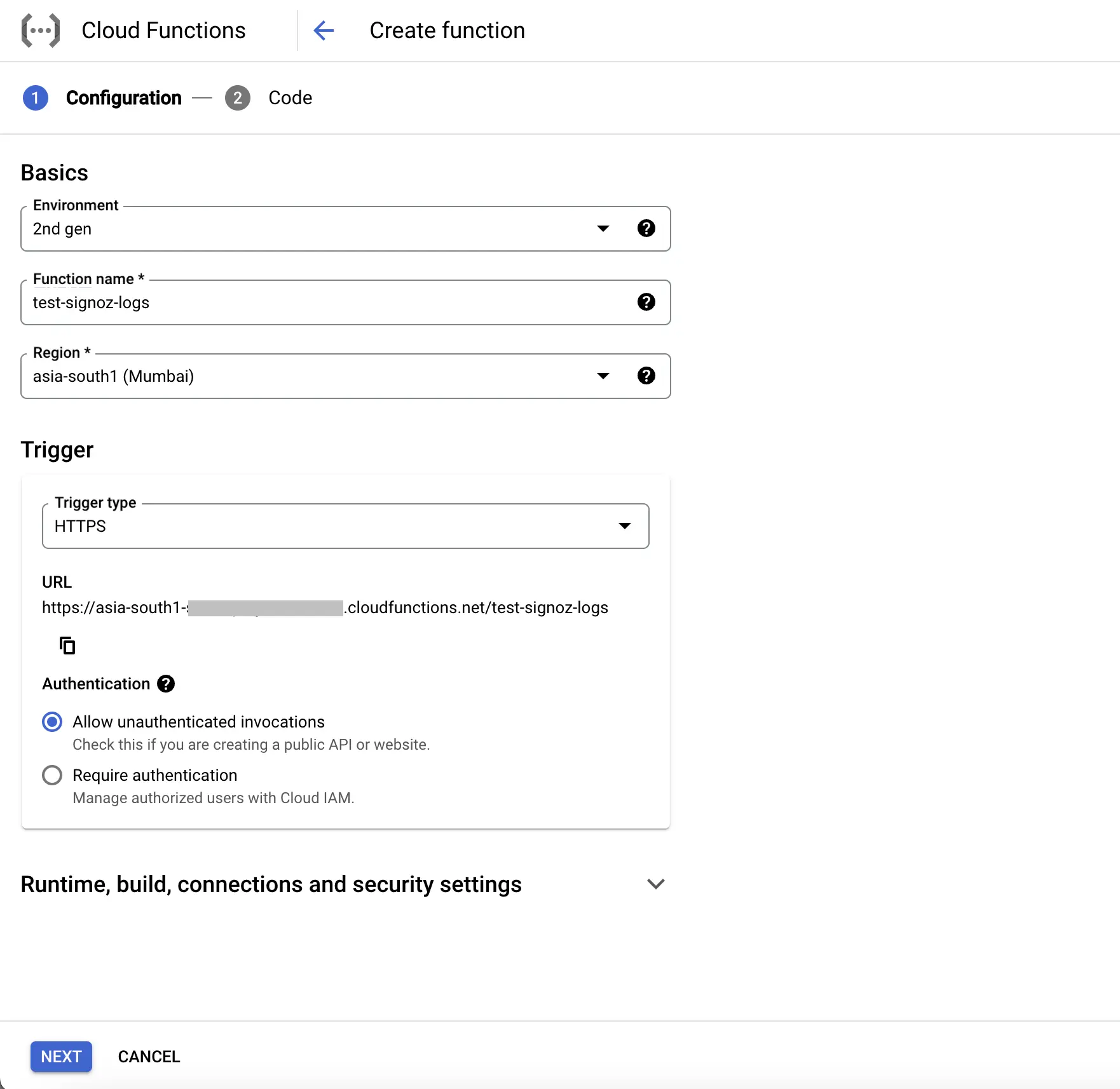

Step 2: Fill in the following details to create a Cloud Function:

- Environment: 2nd gen

- Function name: Name for the Cloud Function

- Region: Uses the default region of your GCP account

- Trigger: Defines how the Cloud Function is invoked

- Trigger Type: HTTPS, which lets us invoke the Cloud Function through a URL

- Authentication: Choose whether you need authenticated or unauthenticated invocations. We have chosen unauthenticated invocation for this demonstration.

Create Cloud Function

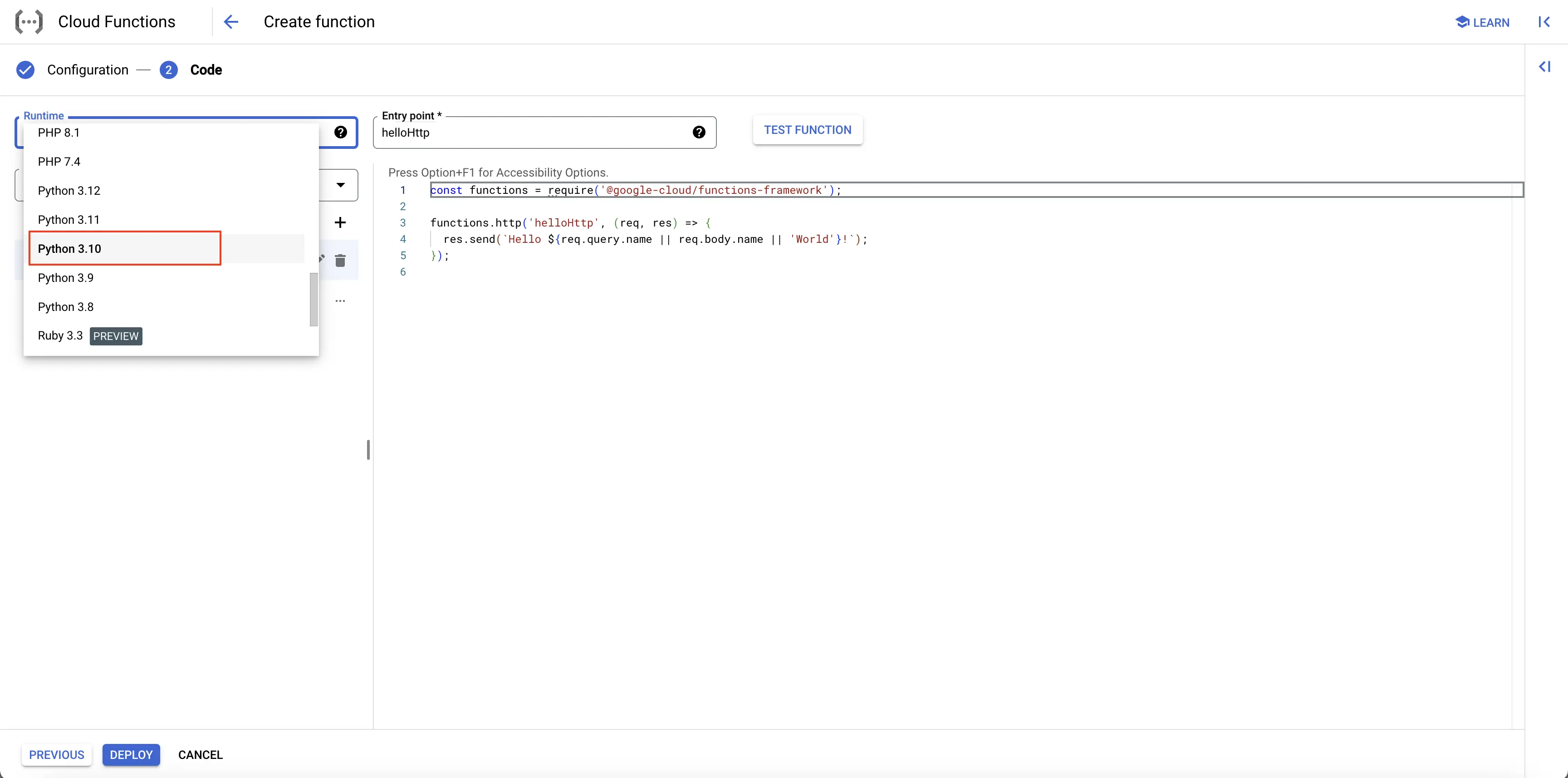

Step 3: Click NEXT to open the page where you add your code.

Select Python 3.10 as the Runtime and set the Entry point to hello_http, the name of the function defined in main.py.

Set entrypoint and source code

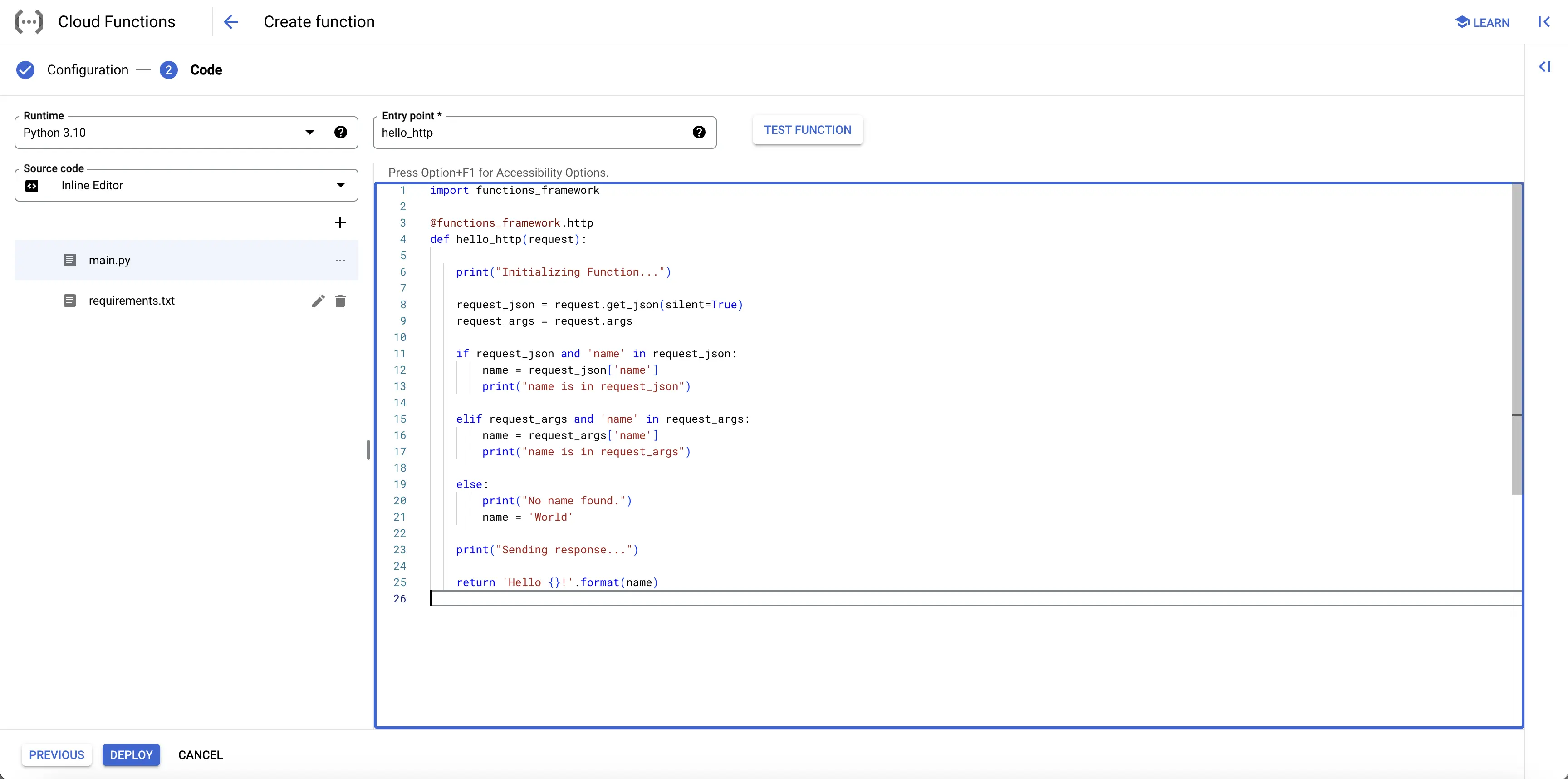

Add code to the Google Cloud Function

Below is the complete code of the main.py file, followed by a high-level overview of what it does.

```python
import functions_framework


@functions_framework.http
def hello_http(request):
    # Output printed to stdout is captured by Cloud Logging as log entries.
    print("Initializing Function...")

    # silent=True returns None instead of raising when the body is not valid JSON.
    request_json = request.get_json(silent=True)
    request_args = request.args

    # Look for 'name' in the JSON body first, then in the query string.
    if request_json and 'name' in request_json:
        name = request_json['name']
        print("name is in request_json")
    elif request_args and 'name' in request_args:
        name = request_args['name']
        print("name is in request_args")
    else:
        print("No name found.")
        name = 'World'

    print("Sending response...")
    return 'Hello {}!'.format(name)
```

Below is a high-level overview of the above code snippet:

- hello_http(request): Handles incoming HTTP requests. It takes 'name' from the JSON body or the query string and falls back to 'World'. It also logs the various stages of execution.

- @functions_framework.http: Decorator that registers hello_http as an HTTP handler for Google Cloud Functions.

- The code prints different messages as log entries and returns a greeting message.
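You can check the branching in hello_http without deploying by pulling the lookup order into a plain function. This is a minimal sketch, not part of the deployed code. resolve_name and greeting are hypothetical helpers, and plain dicts stand in for the JSON body and query arguments that the Flask request object provides at runtime.

```python
from typing import Mapping, Optional


def resolve_name(request_json: Optional[Mapping], request_args: Optional[Mapping]) -> str:
    """Mirror hello_http's lookup order: JSON body, then query string, then a default."""
    if request_json and 'name' in request_json:
        return request_json['name']
    if request_args and 'name' in request_args:
        return request_args['name']
    return 'World'


def greeting(request_json: Optional[Mapping], request_args: Optional[Mapping]) -> str:
    """Build the same response string that hello_http returns."""
    return 'Hello {}!'.format(resolve_name(request_json, request_args))
```

The helper does not depend on functions_framework, so it runs with a stock Python interpreter. For example, greeting(None, {'name': 'GCP'}) returns 'Hello GCP!'.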

No changes are needed in the requirements.txt file.

Once you’ve finished writing your code, click DEPLOY. Google Cloud then provisions the function according to the specified configuration, initializes the environment, installs dependencies, and makes the function ready to handle incoming requests.

Complete Cloud Function Code

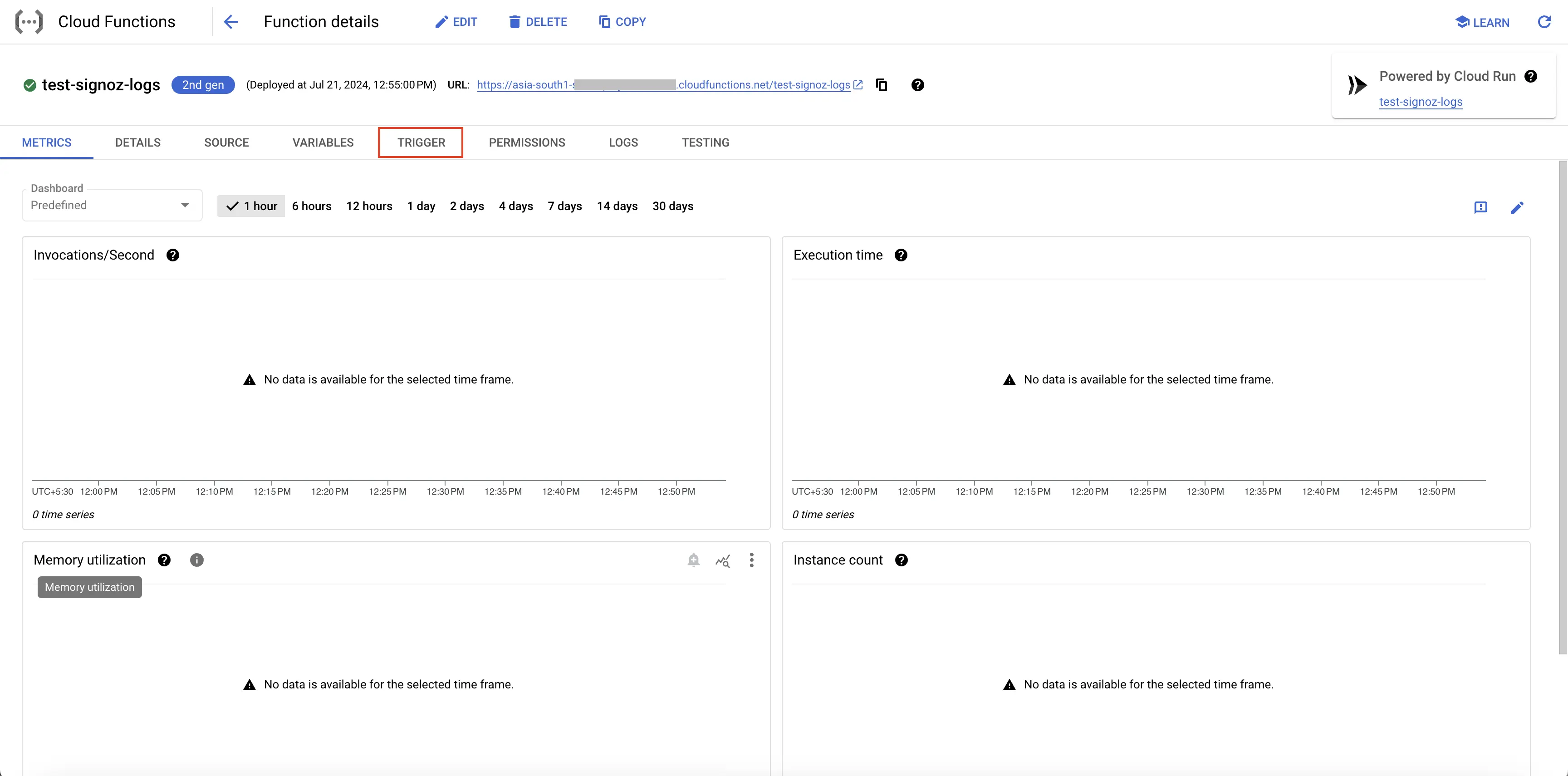

Test your Cloud Function

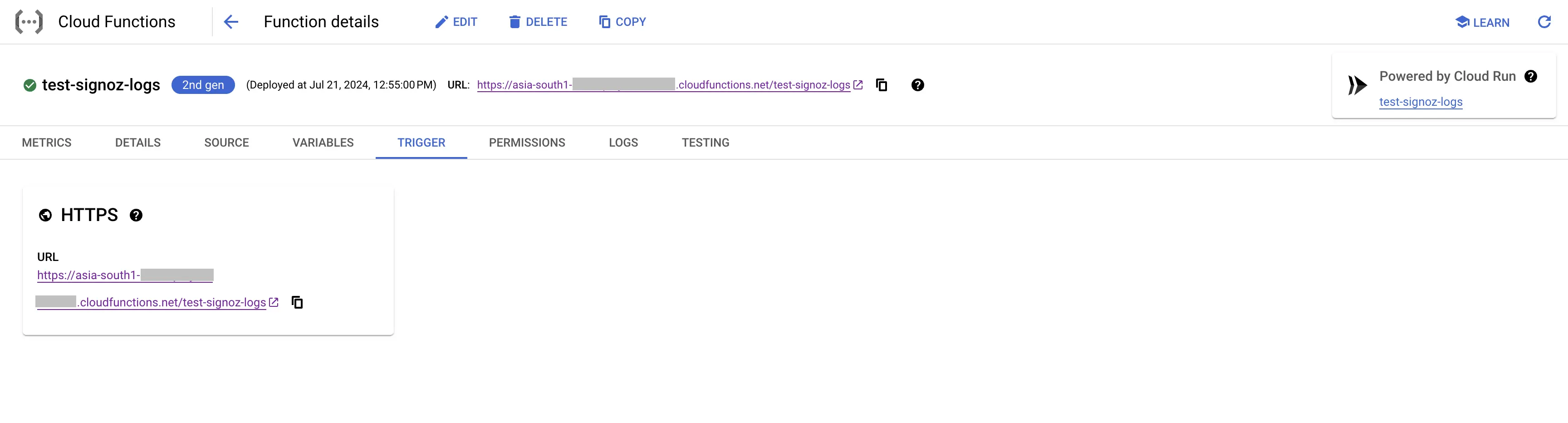

Step 1: Once the deployment completes, open the TRIGGER tab to get the URL that invokes the function.

Navigate to Trigger

Step 2: Open the URL you obtained in a browser. You will see the function's output, for example Hello World! when no name is passed.

Cloud Function URL
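You can also call the function from a script instead of a browser. The sketch below uses only the Python standard library. It builds the two request shapes that hello_http understands:

- a name in the query string, which the function reads through request.args;
- a name in a JSON body, which the function reads through request.get_json. That method expects a Content-Type of application/json.

The helper names are hypothetical, and the URL is whatever your TRIGGER tab shows.

```python
import json
import urllib.parse
import urllib.request


def build_query_request(url: str, name: str) -> urllib.request.Request:
    """GET request that passes name in the query string (read via request.args)."""
    query = urllib.parse.urlencode({'name': name})
    return urllib.request.Request(f"{url}?{query}", method='GET')


def build_json_request(url: str, name: str) -> urllib.request.Request:
    """POST request that passes name in a JSON body (read via request.get_json)."""
    body = json.dumps({'name': name}).encode('utf-8')
    return urllib.request.Request(
        url,
        data=body,
        headers={'Content-Type': 'application/json'},
        method='POST',
    )


def invoke(req: urllib.request.Request, timeout: float = 10.0) -> str:
    """Send the request and return the response body as text."""
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        return resp.read().decode('utf-8')
```

For example, print(invoke(build_query_request(url, 'GCP'))) should print Hello GCP!, where url is your function's trigger URL. This works because the function was created with unauthenticated invocation.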

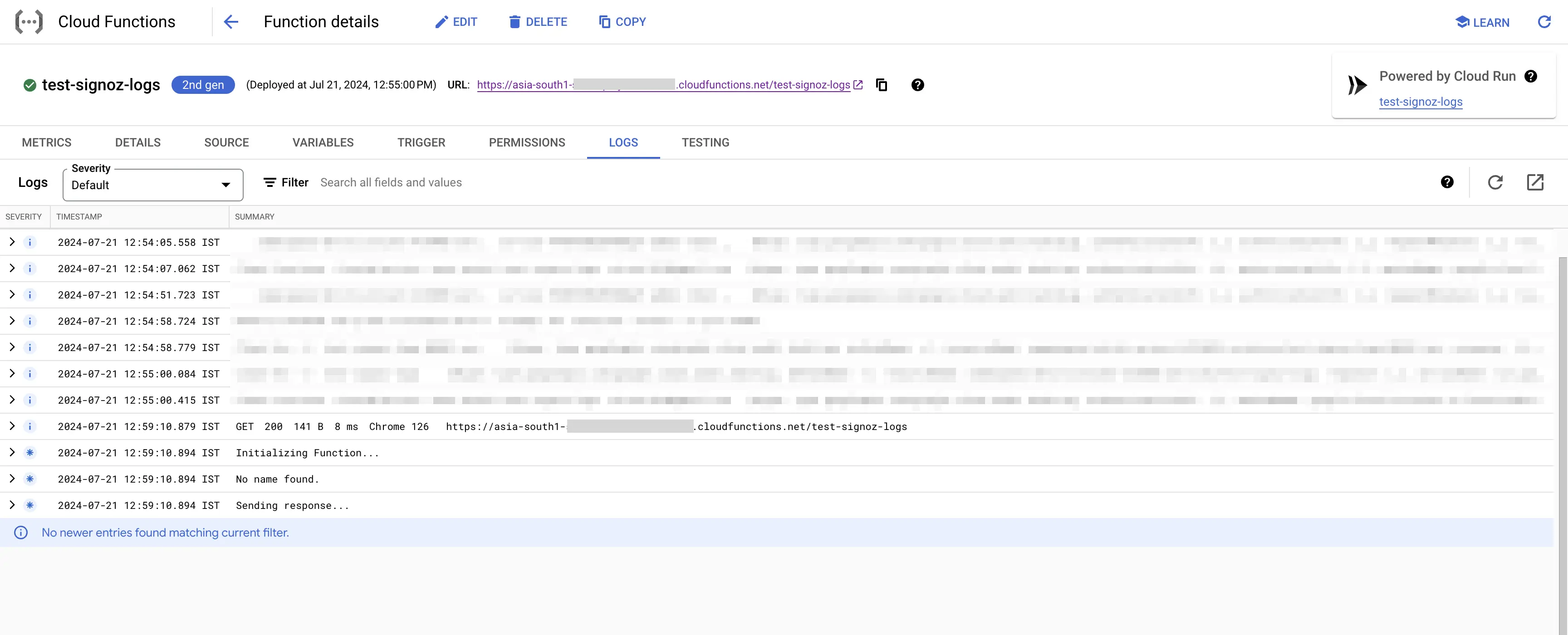

Step 3: Open the LOGS tab of your Cloud Function to view its logs. You should see the messages printed by the function, such as "Initializing Function..." and "Sending response...". The following sections route these logs to SigNoz.

Viewing Cloud Function Logs

Create Pub/Sub topic

Follow the steps mentioned in the Creating Pub/Sub Topic document to create the Pub/Sub topic.

Set up Log Router to Pub/Sub Topic

Follow the steps mentioned in the Log Router Setup document to create the Log Router.
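The Pub/Sub topic and its subscription are referred to by full resource names in two places:

- the Log Router sink destination, which has the form pubsub.googleapis.com/projects/PROJECT_ID/topics/TOPIC_ID;
- the collector's subscription setting later in this guide.

The sketch below builds these strings from a project ID. The function names are hypothetical.

```python
def topic_path(project_id: str, topic_id: str) -> str:
    """Full resource name of a Pub/Sub topic."""
    return f"projects/{project_id}/topics/{topic_id}"


def subscription_path(project_id: str, subscription_id: str) -> str:
    """Full resource name of a Pub/Sub subscription (the collector's subscription field)."""
    return f"projects/{project_id}/subscriptions/{subscription_id}"


def sink_destination(project_id: str, topic_id: str) -> str:
    """Log Router sink destination string for a Pub/Sub topic."""
    return f"pubsub.googleapis.com/{topic_path(project_id, topic_id)}"
```

If you already use the google-cloud-pubsub library, its PublisherClient.topic_path and SubscriberClient.subscription_path helpers produce the same resource names.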

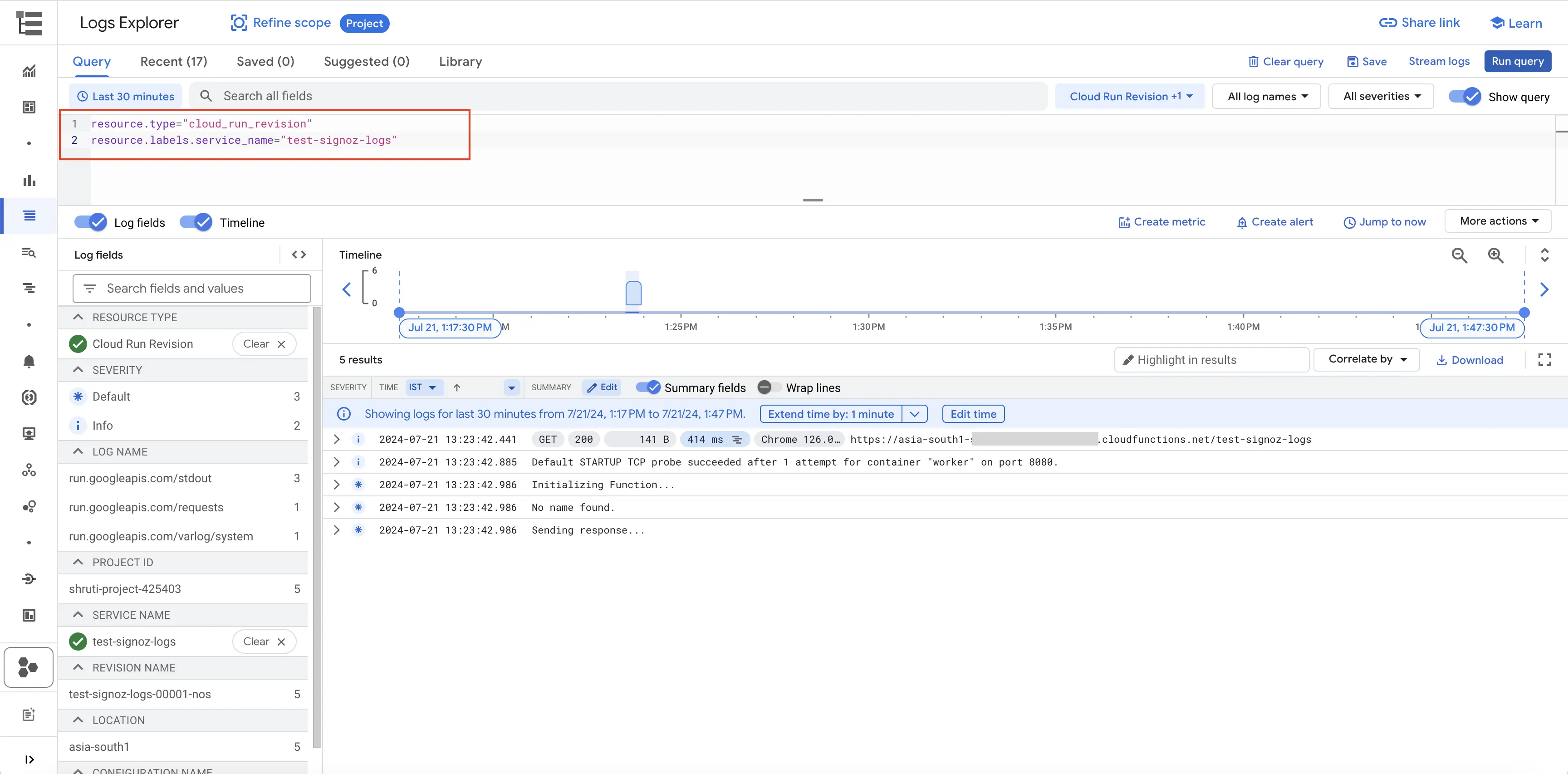

To route only the Cloud Function logs, use the following inclusion filter. Cloud Functions (2nd gen) run on Cloud Run, which is why their log entries carry the cloud_run_revision resource type:

```
resource.type="cloud_run_revision"
```

If you want logs only from a particular Cloud Function, use the following conditions in the Query text box instead. Replace <function-name> with the name of your function:

```
resource.type="cloud_run_revision"
resource.labels.service_name="<function-name>"
```

Filter Cloud Functions Logs
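If you manage several functions, you can generate the inclusion filter instead of typing it. The sketch below assumes one condition per line, as in the filter above; Cloud Logging's query language joins conditions on separate lines with AND. build_inclusion_filter is a hypothetical helper.

```python
from typing import Optional


def build_inclusion_filter(function_name: Optional[str] = None) -> str:
    """Return a Log Router inclusion filter for Cloud Functions (2nd gen) logs.

    2nd gen functions run on Cloud Run, so their entries use the
    cloud_run_revision resource type. Each condition goes on its own line.
    """
    conditions = ['resource.type="cloud_run_revision"']
    if function_name:
        # Narrow the filter to a single function's Cloud Run service.
        conditions.append(f'resource.labels.service_name="{function_name}"')
    return "\n".join(conditions)
```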

Set up OTel Collector

Follow the steps mentioned in the Creating Compute Engine document to create the Compute Engine instance.

Install OTel Collector as an agent

First, set up authentication on the instance using the following commands:

- Initialize gcloud:

```bash
gcloud init
```

- Authenticate into GCP:

```bash
gcloud auth application-default login
```
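gcloud auth application-default login stores Application Default Credentials (ADC). Google client libraries pick these up, and the collector's googlecloudpubsub receiver is expected to rely on them. The sketch below confirms the credentials file is where the libraries usually look. It assumes a Linux instance and checks only two locations: the GOOGLE_APPLICATION_CREDENTIALS variable and gcloud's default path. Treat it as a sanity check, not a full reimplementation of the ADC lookup. find_adc_file is a hypothetical helper.

```python
import os
from pathlib import Path
from typing import Mapping, Optional


def find_adc_file(env: Mapping[str, str] = os.environ,
                  home: Optional[Path] = None) -> Optional[Path]:
    """Return the ADC file that would be picked up first, or None if none is found.

    An explicit GOOGLE_APPLICATION_CREDENTIALS path takes precedence; a missing
    file at that path is reported as None here. Otherwise the default gcloud
    location on Linux is checked. On Compute Engine, credentials may still come
    from the instance's service account even when this returns None.
    """
    explicit = env.get('GOOGLE_APPLICATION_CREDENTIALS')
    if explicit:
        path = Path(explicit)
        return path if path.is_file() else None
    base = home if home is not None else Path.home()
    default = base / '.config' / 'gcloud' / 'application_default_credentials.json'
    return default if default.is_file() else None
```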

Let us now proceed to the OTel Collector installation:

Step 1: Download the otelcol-contrib tar.gz for your architecture. The command below downloads the linux/amd64 build of version 0.88.0:

```bash
wget https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download/v0.88.0/otelcol-contrib_0.88.0_linux_amd64.tar.gz
```
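If your instance is not x86_64, the asset name changes. The sketch below derives the download URL from platform.machine(). It assumes the release assets follow the naming pattern in the command above, with amd64 and arm64 suffixes. collector_tarball_url and the mapping table are illustrative, so check the release page for other architectures.

```python
import platform
from typing import Optional

VERSION = "0.88.0"  # collector version used in this guide
BASE = "https://github.com/open-telemetry/opentelemetry-collector-releases/releases/download"

# platform.machine() values mapped to the architecture suffix in asset names (assumed).
ARCH_MAP = {
    "x86_64": "amd64",
    "amd64": "amd64",
    "aarch64": "arm64",
    "arm64": "arm64",
}


def collector_tarball_url(version: str = VERSION, machine: Optional[str] = None) -> str:
    """Build the otelcol-contrib Linux tarball URL for a CPU architecture."""
    machine = (machine or platform.machine()).lower()
    try:
        arch = ARCH_MAP[machine]
    except KeyError:
        raise ValueError(f"unsupported architecture: {machine}") from None
    name = f"otelcol-contrib_{version}_linux_{arch}.tar.gz"
    return f"{BASE}/v{version}/{name}"
```

For example, collector_tarball_url(machine='aarch64') ends in otelcol-contrib_0.88.0_linux_arm64.tar.gz. If you download a different build, adjust the file name in the tar command of Step 2 to match.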

Step 2: Extract the otel-collector tar.gz to the otelcol-contrib folder:

```bash
mkdir otelcol-contrib && tar xvzf otelcol-contrib_0.88.0_linux_amd64.tar.gz -C otelcol-contrib
```

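Step 3: Create config.yaml in the otelcol-contrib folder with the content below, and replace each placeholder:

- <region>: your SigNoz Cloud region.
- <SigNoz-Key>: the ingestion key provided by SigNoz.
- <gcp-project-id>: your GCP project ID.
- <pubsub-topic's-subscription>: the name of the subscription attached to the Pub/Sub topic you created earlier.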

```yaml
receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318
  googlecloudpubsub:
    project: <gcp-project-id>
    subscription: projects/<gcp-project-id>/subscriptions/<pubsub-topic's-subscription>
    encoding: raw_text
processors:
  batch: {}
exporters:
  otlp:
    endpoint: "ingest.<region>.signoz.cloud:443"
    tls:
      insecure: false
    headers:
      "signoz-ingestion-key": "<SigNoz-Key>"
service:
  pipelines:
    traces:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    metrics:
      receivers: [otlp]
      processors: [batch]
      exporters: [otlp]
    logs:
      receivers: [otlp, googlecloudpubsub]
      processors: [batch]
      exporters: [otlp]
```
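If a placeholder stays in place, the config still parses but points at the wrong endpoint, key, or subscription. The sketch below fills the <...> placeholders from a dict and refuses to return a result while any remain. fill_placeholders is a hypothetical helper. It assumes placeholders look like the ones in the config above: angle brackets with no whitespace inside.

```python
import re
from typing import Mapping

# Matches placeholders such as <region> or <pubsub-topic's-subscription>.
PLACEHOLDER = re.compile(r"<[^<>\s]+>")


def fill_placeholders(template: str, values: Mapping[str, str]) -> str:
    """Replace <name> placeholders and raise if any are left unfilled."""
    for key, value in values.items():
        template = template.replace(f"<{key}>", value)
    leftover = sorted(set(PLACEHOLDER.findall(template)))
    if leftover:
        raise ValueError(f"unfilled placeholders: {', '.join(leftover)}")
    return template
```

For example, you could keep the config above as a template file (the name config.template.yaml is hypothetical). Pass its text to fill_placeholders with your region, key, project ID, and subscription, and write the result to config.yaml.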

Step 4: Once the configuration is in place, run the collector from the otelcol-contrib folder:

```bash
./otelcol-contrib --config ./config.yaml
```

Run in background

To run the OTel Collector process in the background instead:

```bash
./otelcol-contrib --config ./config.yaml &> otelcol-output.log & echo "$!" > otel-pid
```

This command redirects the collector's output to the otelcol-output.log file and writes the process ID of the background OTel Collector process to the otel-pid file.
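The otel-pid file lets you manage the background process later. The sketch below uses Python's os and signal modules on Linux. It mirrors `kill -0 $(cat otel-pid)` to check that the collector is still running and `kill $(cat otel-pid)` to stop it with SIGTERM, which the collector treats as a request to shut down. The helper names are hypothetical.

```python
import os
import signal
from pathlib import Path


def read_pid(pid_file: str = "otel-pid") -> int:
    """Read the process ID written by `echo "$!" > otel-pid`."""
    return int(Path(pid_file).read_text().strip())


def is_running(pid: int) -> bool:
    """Signal 0 checks that the process exists without affecting it."""
    try:
        os.kill(pid, 0)
    except ProcessLookupError:
        return False
    except PermissionError:
        # The process exists but belongs to another user.
        return True
    return True


def stop(pid_file: str = "otel-pid") -> None:
    """Ask the background collector to shut down by sending SIGTERM."""
    os.kill(read_pid(pid_file), signal.SIGTERM)
```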

To follow the output of the background collector process, run:

```bash
tail -f -n 50 otelcol-output.log
```

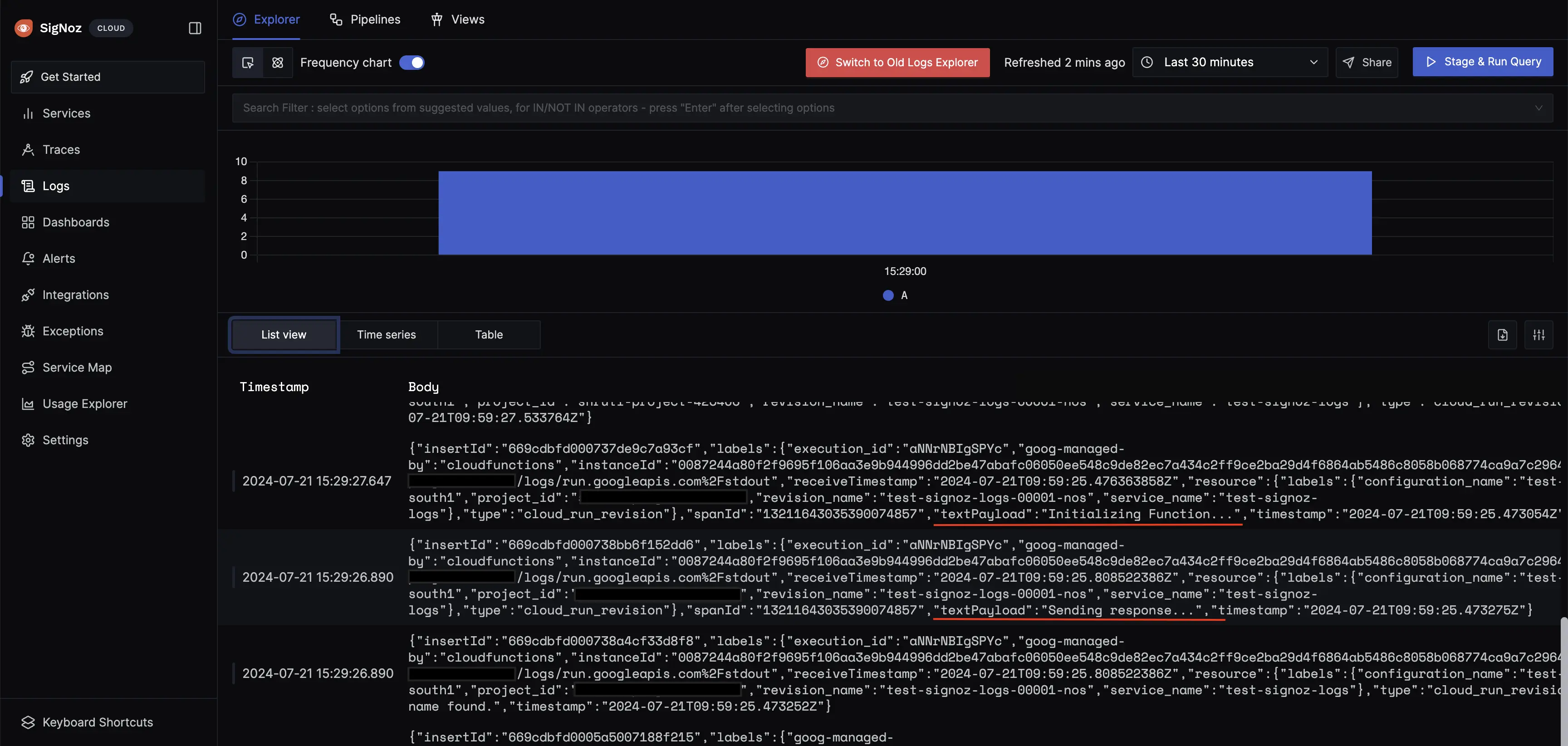

You can now trigger the Cloud Function a few times and see its logs in SigNoz.

Functions Logs in SigNoz Cloud